Removing ANSI gobbledygook from fly machines output

fly machines status --display-config MACHINE_ID | awk 'BEGIN {c=0} /Config:/ {c=1;next} {gsub(/[^\11\12\15\40-\176]/,"")} {gsub(/\[[0-9]{1,2}m/,"")} {if (c) print}' | jq .
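For anyone who would rather not decode that awk program, here is a rough Python sketch of the same idea: strip the ANSI escape sequences, skip everything up to the Config: line, and parse the rest as JSON. The function name extract_config and the sample text in the usage note are made up for illustration; the real command output may be laid out differently.

```python
import json
import re

# CSI escape sequences such as "\x1b[1m" or "\x1b[0m" (bold, colors, reset).
ANSI_CSI = re.compile(r"\x1b\[[0-9;?]*[ -/]*[@-~]")


def extract_config(status_output: str) -> dict:
    """Return the JSON that follows the 'Config:' line, with ANSI codes removed.

    Hypothetical helper: assumes everything after 'Config:' is one JSON document.
    """
    clean = ANSI_CSI.sub("", status_output)
    lines = clean.splitlines()
    for index, line in enumerate(lines):
        if "Config:" in line:
            # Keep only what comes after the marker line, like the awk filter does.
            return json.loads("\n".join(lines[index + 1:]))
    raise ValueError("no 'Config:' line found in the output")
```

Usage would be something like extract_config(subprocess.run([...], capture_output=True, text=True).stdout), with the fly command from above in place of the dots.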

This article, “Share Python scripts like a pro”, shows a way to use uv to store dependency information within a script (the single-file, custom-purpose kind that are so common) and to leverage uv’s speed and caching to provision dependencies at runtime.

In short: uv add --script your-script.py the-dependency. The dependencies get added as a comment at the top of the script, and uv run your-script.py then ensures they are installed and available.
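The comment in question is the inline script metadata block described in PEP 723. Below is a small sketch of roughly what it looks like and how it can be read back out of a script. The example script, the requests dependency, and the helper name read_script_metadata are illustrations only, not something taken from the article.

```python
import re

# Roughly what a script looks like after adding a dependency to it.
# The dependency "requests" is only an example.
EXAMPLE_SCRIPT = '''\
# /// script
# requires-python = ">=3.12"
# dependencies = [
#     "requests",
# ]
# ///
import requests
print(requests.__version__)
'''

# Matches the "# /// script" ... "# ///" comment block and captures its body.
BLOCK = re.compile(r"(?m)^# /// script$\n(?P<content>(?:^#(?: .*)?$\n)+?)^# ///$")


def read_script_metadata(source: str) -> str:
    """Return the TOML text inside the script metadata block, or '' if absent."""
    match = BLOCK.search(source)
    if match is None:
        return ""
    lines = match.group("content").splitlines()
    # Drop the leading "# " (or a bare "#") from every comment line.
    return "\n".join(line[2:] if line.startswith("# ") else line[1:] for line in lines)
```

Calling read_script_metadata(EXAMPLE_SCRIPT) gives back the plain TOML, which is the part a runner needs in order to know what to install before executing the script.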

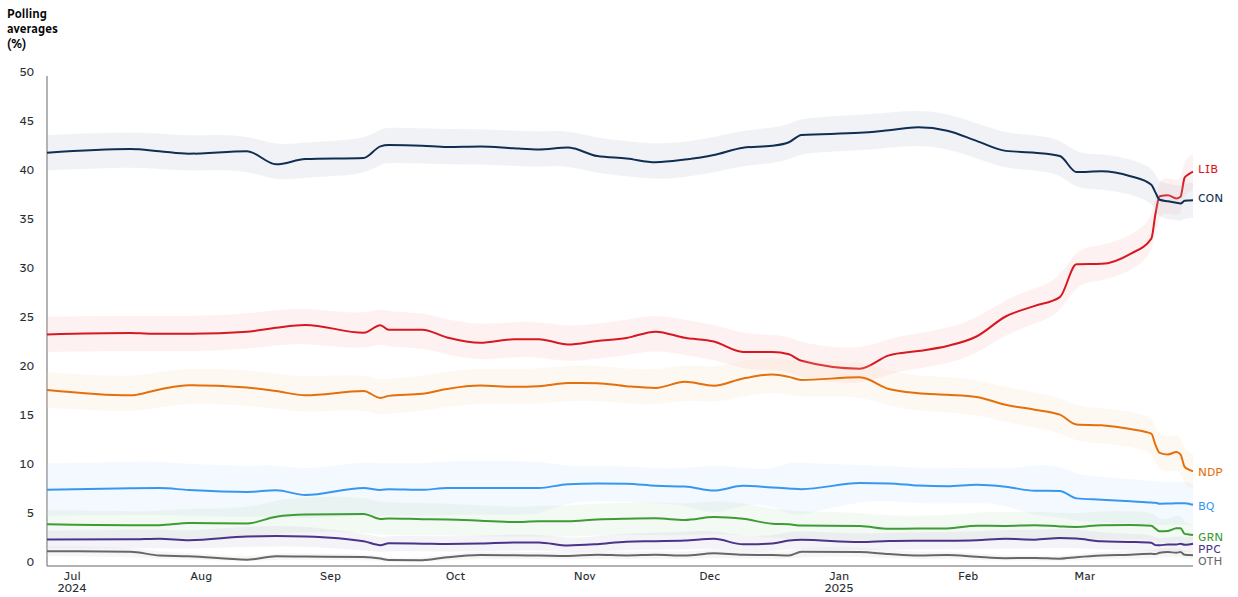

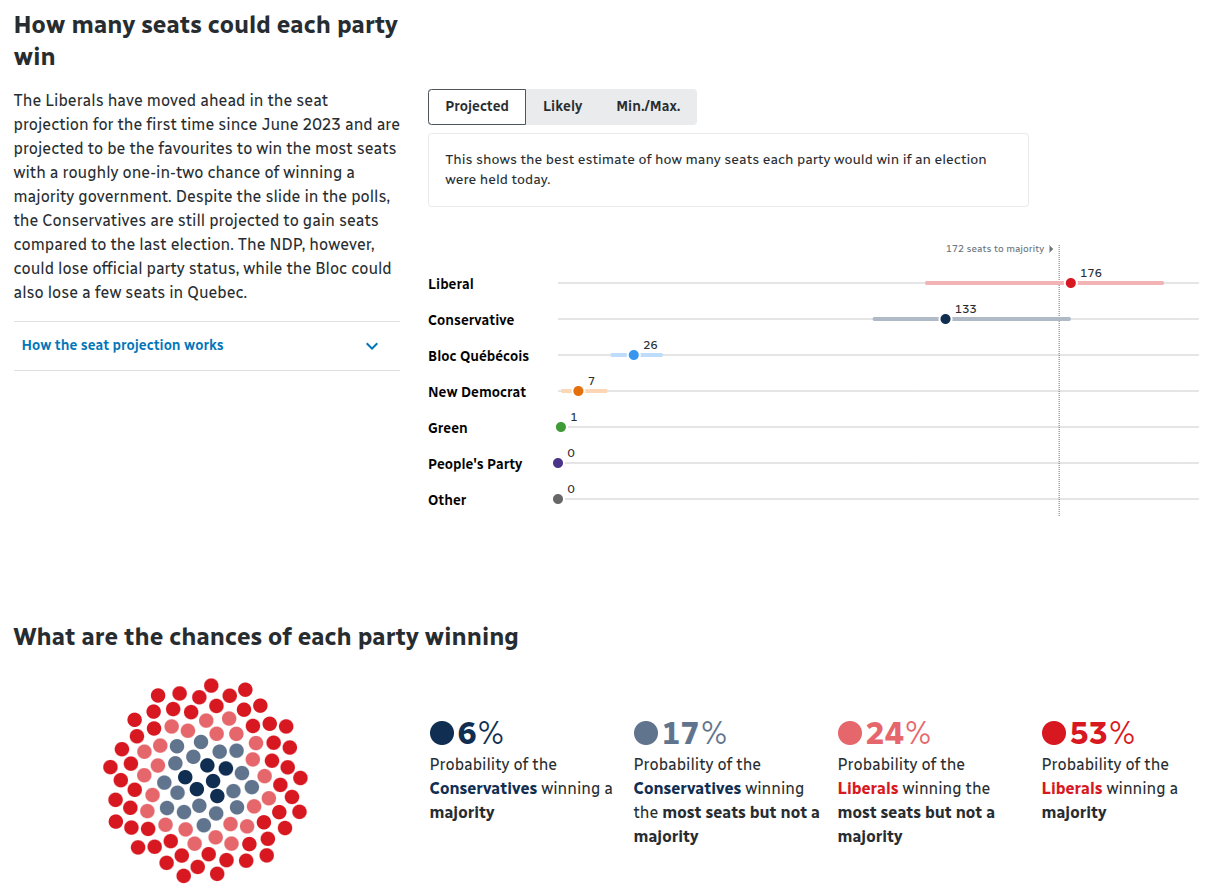

What this graph shows is that people didn’t really “want” Pierre Poilievre; they just didn’t “want” Justin Trudeau anymore. The moment the Liberal party offered another choice of leader, everybody flocked away from “Adolf” Poilievre. That’s why most of his discourse since then has been running around waving his arms and whining “hey! I can do it too! remember me?”. Neither he nor his party is actually “wanted” by people. I don’t think they have a chance while they have such a radical ultra-right-wing leader.

Glad to see people are starting to come around to: the Conservatives are NOT an option, not with “Adolf” Poilievre at the helm.

The Python world is abuzz with the wonders of uv. I find it quite clunky. I’m starting to look into it for the speed, which is indeed awesome, but this was incredibly unintuitive.

I had:

python3 -mvenv env

env/bin/pip install --no-cache-dir --upgrade -r requirements.txt

My first try to uv-ize this was

uv venv env

uv pip install --prefix env --no-cache-dir --upgrade -r requirements.txt

This breaks because stuff installed in the venv refers to the system Python interpreter /usr/bin/python3.12 and not the one installed in the venv.

WHY??? Why doesn’t it use env python?

Turns out one should not use --prefix, as the docs state:

prefer the use of --python to install into an alternate environment, as scripts and other artifacts installed via --prefix will reference the installing interpreter

Now you tell me. OK, how to make uv pip refer to the venv I created in env? uv pip install --python 3.12 --prefix env, right?

WRONG! This still doesn’t properly rewrite the shebang in executables in the virtualenv.

The docs do say uv will work on the environment pointed to by VIRTUAL_ENV if specified, so it turns out the arcane invocation to do what I needed was:

uv venv env --python 3.12

VIRTUAL_ENV=env uv pip install --no-cache-dir --upgrade -r requirements.txt
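To check whether an install went into the venv properly, one option is to look at the shebang lines of the scripts in env/bin. The following is only a sketch: the helper name foreign_shebangs is made up, and it assumes a Unix-style layout with a bin directory and plain "#!/path/to/python" shebangs.

```python
from pathlib import Path


def foreign_shebangs(venv_dir: str) -> list[str]:
    """Return names of scripts in <venv_dir>/bin whose shebang points outside the venv."""
    bin_dir = Path(venv_dir).resolve() / "bin"
    offenders = []
    for script in sorted(bin_dir.iterdir()):
        if not script.is_file():
            continue
        with script.open("rb") as handle:
            # Only the first line matters; cap the read in case the file is binary.
            first_line = handle.readline(256).decode("utf-8", errors="replace").strip()
        if not first_line.startswith("#!"):
            continue  # not a script with a shebang (for example the interpreter itself)
        interpreter = first_line[2:].strip()
        if not interpreter.startswith(str(bin_dir)):
            offenders.append(script.name)
    return offenders
```

Running print(foreign_shebangs("env")) after the install lists any script that still refers to an interpreter outside the venv, which is the symptom the --prefix attempts produced.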

This works and if one squints a bit, all this info is in the documentation but it’s very undiscoverable and the breadcrumbs will lead you to all sorts of different places. I ended up cobbling the above together by also looking at this blog article from saaspegasus.

Just for kicks, let’s see what an AI would say here.

hi chatgpt. I have these commands to set up a virtual environment and install some dependencies:

python3 -mvenv env

env/bin/pip install --no-cache-dir --upgrade -r requirements.txt

How can I use the uv utility to create my virtual environment in the env directory and install dependencies listed in requirements.txt? I want uv equivalents of the above two commands please.

The response (spoiler: it’s wrong):

the equivalent uv commands for your original commands would be:

uv env create --path env

uv pip install --no-cache-dir --upgrade -r requirements.txt

I was mean to chatgpt. Asked it to solve a problem I already knew the solution to. Have to admire the confidence but it got the answer wrong - and wrong again even after I corrected the initial error. So I remain unimpressed by AI’s inability to replace me.

I updated a Docker container’s ENTRYPOINT to “/bin/sleep inf” but when I start it, I get this error: “/bin/sleep: invalid time interval ‘start’”. How to fix it?

The issue you’re facing arises because the sleep command expects a valid time duration, and inf isn’t a recognized valid duration format in many versions of sleep

actually it’s because there’s also a CMD defined in the Docker image. (note: at this point it’s useless to use AI, I’ve already figured out the solution).

Ah, I see! The issue arises because ENTRYPOINT and CMD are used together in Docker, and they can conflict if not set correctly. When both ENTRYPOINT and CMD are defined, the CMD provides default arguments to the ENTRYPOINT. However, if CMD is defined as “/bin/sleep inf”, and ENTRYPOINT is also /bin/sleep, Docker tries to execute /bin/sleep with the argument inf, which causes the error you’re seeing.

(yes, but the error indicates that the CMD is “start”. Whatever, at this point I’ve wasted more time playing with the AI than doing the actual work. At least I got a fun blog post out of the interaction.)
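For the record, here is a tiny model of what was going on, assuming exec-form ENTRYPOINT and CMD and assuming the stray “start” argument really did come from a CMD: the CMD items are appended to the ENTRYPOINT as arguments. The function name container_argv is made up for this sketch.

```python
import shlex


def container_argv(entrypoint: list[str], cmd: list[str]) -> list[str]:
    """Model of how exec-form ENTRYPOINT and CMD combine: CMD supplies trailing arguments."""
    return entrypoint + cmd


# The new entrypoint plus the leftover CMD: sleep receives "start" as a second interval.
broken = container_argv(["/bin/sleep", "inf"], ["start"])
print(shlex.join(broken))

# With an empty CMD, only the intended command remains.
fixed = container_argv(["/bin/sleep", "inf"], [])
print(shlex.join(fixed))
```

The first command line is the one that makes sleep complain about the invalid time interval ‘start’; inf itself was never the problem.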

Roblox is full of dark patterns intended to trap kids into spending money; they care about nothing else. Their communications insist on the contrary, for example:

“Roblox aims to create a safe platform for users by providing parents with visibility and control over their child’s experience”

This is a blatant lie. If they actually cared about empowering parents to control the experience they would provide one crucial feature: ability to block specific games so the kid can’t play them. But this is not possible. Instead the only way to control content is to use the “content maturity” thing they provide. However this is next to useless because a lot of the content is mis-classified. The lowest category, “minimal” is something I would expect to be able to give to a 5-year-old without reservations. But “minimal” maturity games include:

As a parent keeping a close eye on what the kid is doing I notice these things but the platform leaves me powerless to prevent kids from being exposed to this, short of a complete Roblox ban.

It’s clear to anyone that Roblox doesn’t really care about child safety on their cursed platform.

Whenever market bubbles burst, one always talks of a “correction” as a healthy and common feature of the capitalist boom/bust cycle. I wonder why a “correction” always works going down / erasing value and absolutely never works in favour of those who usually lose money (investors!) - in other words, why is a correction a “sorry you got screwed” event and not a “hey you all are suddenly rich” one.

Wonders of capitalism.

In light of Musk’s responses to the recent petition to strip his Canadian citizenship: the article says there are no grounds to remove citizenship unless there was misrepresentation when it was obtained. However, there is precedent for going back and reviewing how citizenship was obtained, as seen in 2024 for an ISIS terrorist with Canadian citizenship. Terrorism is a serious offence; so is treason, which is what Musk is doing. I think it’s fair to at least launch a review of the circumstances through which he acquired the citizenship; or failing that, to start a legal process looking into his treasonous declarations and maybe requesting his extradition from the US.

As for his responses (which I won’t even quote here, go read the first article): not recognizing a country’s statehood is literally one step before not recognizing people’s humanity - this is more of a fascist gesture than Musk’s nazi salute and bears keeping an eye on and giving a strong response to, to guard Canada from attacks from antidemocratic terrorist fascists.

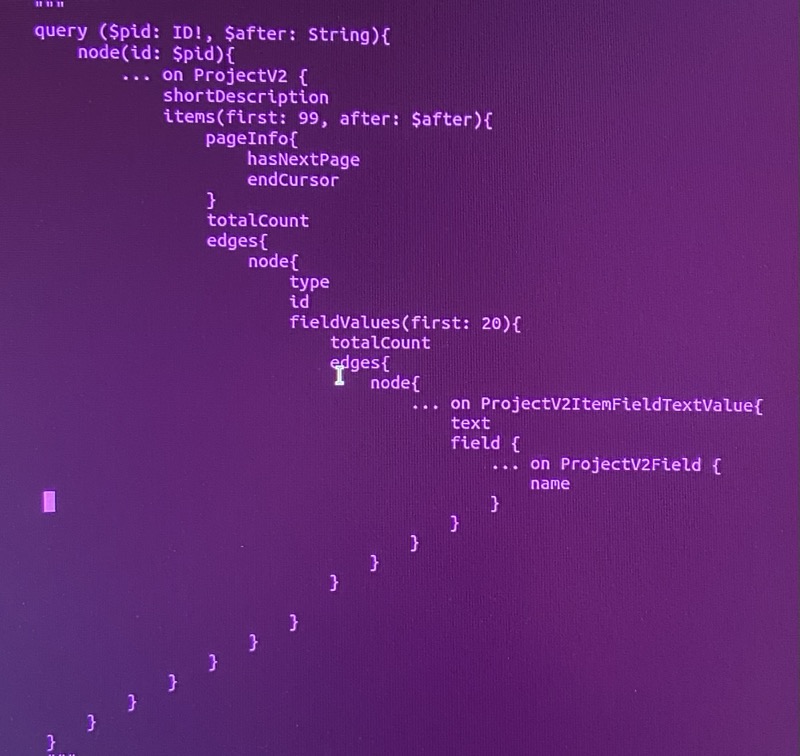

There’s a special place in hell for the deranged maniac who invented GraphQL.